TL;DR:

AI isn’t a toy on the sidelines—it’s already in blockbuster pipelines (de-aging, synthetic voices, AI-generated title sequences) and indie toolkits (text-to-video, auto-roto, speech cleanup). The shocker: the biggest ROI is not replacing artists, but giving small teams “studio-scale” superpowers—fewer bottlenecks, faster iteration, and more creative options.

Table of Contents

- What AI actually does in a film/VFX pipeline

- Case studies you can point to in meetings

- ROI math: where time/money are really saved

- Two practical playbooks (indie & studio)

- Apps & sites to use—today

- Risks, ethics & compliance (with guardrails)

- Quick glossary & SEO keyword pack

- FAQ & “where this is going next”

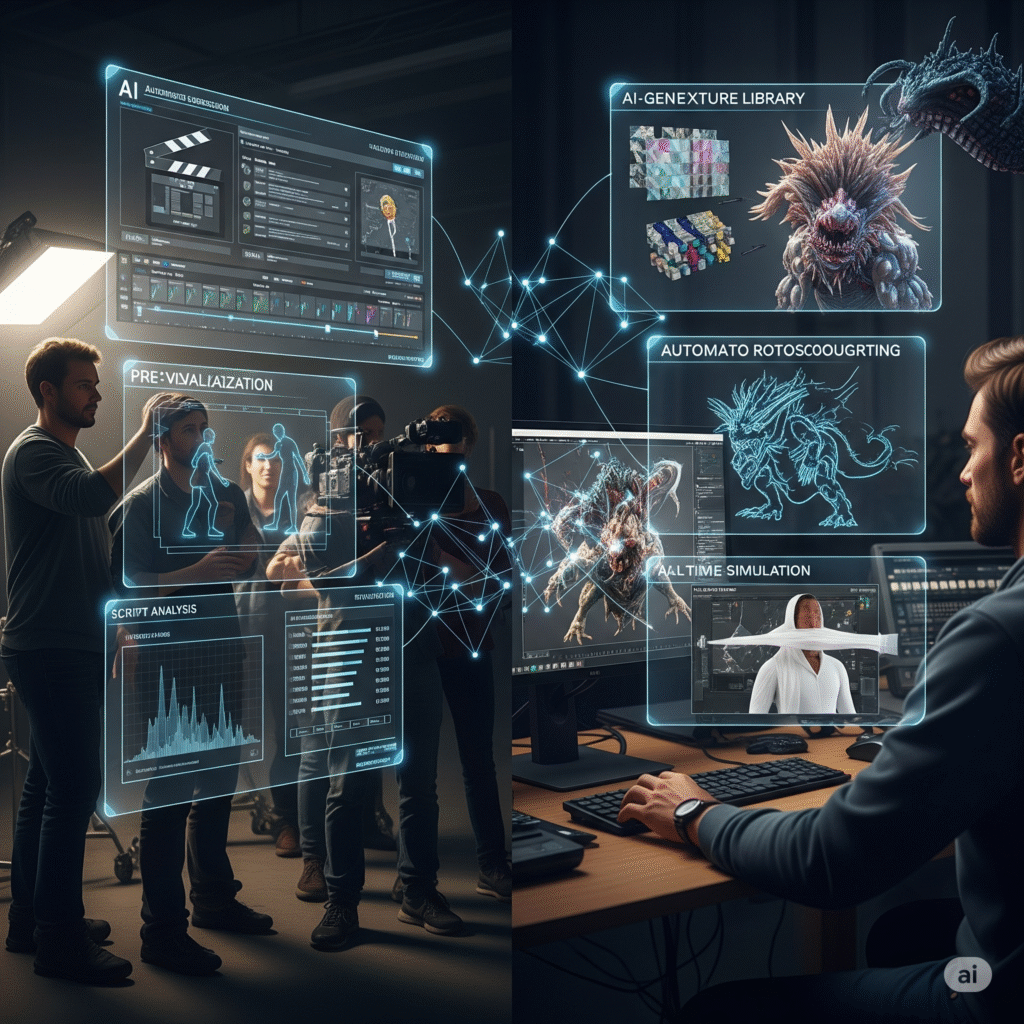

1) What AI actually does in a film/VFX pipeline

“AI doesn’t replace taste; it compresses the time between ideas and images.”

| Pipeline Stage | What AI Does Well | Typical Tools |
|---|---|---|
| Development | Script coverage, audience fit, comps, pitch visuals | StoryFit, Cinelytic (greenlight analytics), Midjourney, Firefly |
| Pre-vis & Storyboarding | Fast boards, animatics, camera/lighting previs, virtual scouting | Unreal Engine + MetaHuman, Runway Gen-3, Luma Dream Machine |
| Production | Markerless mocap, continuity checks, live color looks, on-set cleanup notes | Wonder Studio, Move.ai/RADiCAL, iZotope RX notes, Kira for continuity |
| Post (Edit) | Text-based editing, speech-to-text, auto-transcribe/rough cut | Adobe Premiere Pro (Text-Based Editing, Enhance Speech), Descript |
| VFX/Comp | Roto, matte gen, upscaling, face work, object removal | After Effects (Roto Brush), Topaz Video AI, Nuke (CopyCat), Resolve |
| Color | Auto-isolation, face/sky keys, look matching | DaVinci Resolve (Neural Engine: Magic Mask, Relight) |
| Sound | Dialogue clean, ADR guides, language dubbing, temp score | iZotope RX, ElevenLabs/Respeecher, Auphonic |
| Marketing | Trailers, key art variations, subtitling/dubbing | Runway, Kaiber, Rev/Speechmatics, Canva + AI |

Why this matters: most of the labor in film is iteration. AI shortens that loop, allowing more tries per day, which tends to make the final cut better.

2) Case studies you can point to in meetings

A) Feature de-aging that audiences actually watched

Lucasfilm/ILM de-aged Harrison Ford for ~25 minutes in Indiana Jones and the Dial of Destiny using a blend of ML, archival footage search, and proprietary capture (Flux) plus traditional VFX. It wasn’t “just a filter”; it was AI plus artistry, and it shipped to cinemas worldwide. (WIRED, Allure, Wikipedia, Motion Picture Association)

B) Synthetic voices in major franchises

Respeecher’s neural speech synthesis recreated a younger Luke Skywalker’s voice for The Mandalorian and The Book of Boba Fett and supported Darth Vader’s voice in Obi-Wan Kenobi, with consent and supervision, showing AI’s role in performance continuity. (Vanity Fair, Respeecher)

C) AI-generated title design on a Disney+ series

Secret Invasion’s opening titles used generative AI (with Method Studios) and sparked industry debate on where AI belongs in credits. Regardless of opinion, this is already in professional pipelines. (Polygon, The Washington Post, Wikipedia)

D) Text-to-video as real pre-vis (today)

Runway’s Gen-3 Alpha and Luma’s Dream Machine have enabled teams to ideate motion, lighting, and blocking from text/image prompts—useful for look-dev and boards. (Runway, Luma AI)

3) ROI math: where time/money are really saved

| Task | Old World | With AI | Realistic Win |
|---|---|---|---|
| Rotoscoping 10 shots | 2–4 days junior artist | Hours w/ AI matte + clean-up | 2×–5× faster |
| Dialogue cleanup (noisy set) | ADR + RX lab time | On-editor “Enhance Speech” + RX polish | Saves ADR trips |
| Pre-vis pass on action beat | 1–2 weeks | 1–2 days w/ text-to-video + Unreal | More iterations |
| Upres archival or stock | License again or reshoot | Topaz Video AI upscale | Budget saved |

These are conservative, observed ranges in real shops; your mileage varies with shot complexity and supervision quality.
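To make the roto row concrete, here is a minimal Python sketch of the arithmetic behind a "realistic win". Every number in it is an illustrative assumption (an 8-hour day, a 3-day manual pass, 8 hours of AI-assisted work, a $40 blended hourly rate), not a measurement from any production, and `TaskEstimate` is a hypothetical name. Swap in your own figures.

```python
from dataclasses import dataclass


@dataclass
class TaskEstimate:
    """One row of an internal ROI sheet. All values are your own estimates."""
    name: str
    baseline_hours: float  # time for the task without AI assistance
    assisted_hours: float  # AI first pass plus human clean-up
    hourly_rate: float     # blended artist cost per hour (assumed)

    def __post_init__(self) -> None:
        if self.baseline_hours <= 0 or self.assisted_hours <= 0:
            raise ValueError("hour estimates must be positive")

    @property
    def speedup(self) -> float:
        return self.baseline_hours / self.assisted_hours

    @property
    def hours_saved(self) -> float:
        return self.baseline_hours - self.assisted_hours

    @property
    def cost_saved(self) -> float:
        return self.hours_saved * self.hourly_rate


# Assumed example: 3 days x 8 hours by hand vs. 8 hours with an AI matte pass.
roto = TaskEstimate("Roto, 10 shots", baseline_hours=3 * 8,
                    assisted_hours=8, hourly_rate=40.0)
print(f"{roto.name}: {roto.speedup:.1f}x faster, "
      f"{roto.hours_saved:.0f} h saved, ${roto.cost_saved:,.0f} saved")
# prints: Roto, 10 shots: 3.0x faster, 16 h saved, $640 saved
```

With these assumed inputs the result lands inside the 2×–5× range from the table. The point of the sketch is the habit of recording both the baseline and the assisted time for the same task, since the clean-up hours are part of the real cost.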

4) Two practical playbooks you can start using now

A) Indie creator / small studio (5–20 people)

- Board → Look Dev: Block the scene with Runway Gen-3 or Luma Dream Machine variations; lock tone, color motifs, and lensing ideas. (Runway, Luma AI)

- Cut faster: Import selects to Premiere Pro, use Text-Based Editing and Enhance Speech for temp audio. (Blackmagic Design)

- Auto-mattes first: Generate rough mattes with After Effects Roto Brush/AI; finish edges by hand. (Fewer “trash frames”.) (Blackmagic Design)

- Lift the image: Topaz Video AI for upscaling/denoise; conform back to your timeline. (Topaz Labs)

- Polish + color: Resolve Neural Engine for Magic Mask/Relight; final grade in Resolve. (Blackmagic Design)

- Sound pass: iZotope RX for clicks/room tone; Auphonic for loudness; optional guide ADR with ElevenLabs (with permission).

Result: you keep creative control, but cut days from roto, cleanup, and temp audio.

B) Studio / agency team (50+ people)

- Ethics first: Talent AI clauses + consent workflows; watermarking/provenance (C2PA) for AI-touched shots.

- Centralized pre-vis: Build a prompt library with look-refs; route all AI outputs through shot leads for taste and continuity.

- VFX triage: Tag shots as AI-friendly (sky replacements, crowds, utility paint) vs hand-craft (hero faces, hair sims).

- Audit & archive: Store prompts, seeds, and training references for reuse and legal audit trails.

- Comms: Credit humans clearly; disclose AI assistance where policy requires.
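The triage and audit steps above can be sketched as a small per-shot record written out as one JSON line per AI-assisted task. This is only one possible shape: the class name, field names, tag values, and the example shot ID and file path are all hypothetical, and a real studio would map them onto its own tracking system.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

# Hypothetical triage vocabulary, mirroring the AI-friendly vs hand-craft split.
TRIAGE_TAGS = {"ai_friendly", "hand_craft"}


@dataclass
class AIUsageRecord:
    """Audit entry for one AI-assisted task on one shot (illustrative schema)."""
    shot_id: str
    tool: str
    prompt: str
    seed: Optional[int]            # None if the tool exposes no seed
    triage_tag: str = "ai_friendly"
    reference_files: List[str] = field(default_factory=list)
    consent_on_file: bool = False  # required for any face/voice work
    reviewed_by: str = ""          # shot lead who approved the output
    logged_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def __post_init__(self) -> None:
        if self.triage_tag not in TRIAGE_TAGS:
            raise ValueError(f"unknown triage tag: {self.triage_tag}")


def to_json_line(record: AIUsageRecord) -> str:
    """Serialize a record as a single line, suitable for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


record = AIUsageRecord(
    shot_id="sq010_sh0040",
    tool="Runway Gen-3",
    prompt="dusk skyline, slow push-in, anamorphic flare",
    seed=1234,
    reference_files=["refs/look_v2.png"],
    reviewed_by="shot lead",
)
line = to_json_line(record)
print(line)
```

An append-only file of such lines is easy to search later and easy to hand to legal. A plain-text format is a deliberate choice here: it survives tool changes better than a vendor-specific project file.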

5) Apps & websites to use (by job-to-be-done)

Pre-vis & motion ideation: Runway Gen-3, Luma Dream Machine, Kaiber, Pika

Virtual production & characters: Unreal Engine + MetaHuman, Wonder Studio, NVIDIA Omniverse

Roto, cleanup, upscaling: After Effects (Roto Brush), Topaz Video AI, DaVinci Resolve (Magic Mask/Relight) (Blackmagic Design, Topaz Labs)

Editorial & dialogue: Adobe Premiere Pro (Text-Based Editing, Enhance Speech), Descript, iZotope RX (Blackmagic Design)

Voices & ADR (with consent): Respeecher, ElevenLabs (Vanity Fair)

Analytics (dev/greenlight): StoryFit, Cinelytic (decision support)

One-liner you can steal: “Use AI for velocity, not for verdicts.” (Let data inform; let humans decide.)

6) Risks, ethics & compliance (with guardrails)

| Risk | What it looks like | Guardrail |
|---|---|---|
| Consent & likeness | De-aging/voice without explicit permission | Written consent per use, revocable terms, royalties; document provenance |
| Attribution & credits | AI replacing junior roles invisibly | Credit humans; define “AI-assisted” tags in production bible |
| Bias in datasets | Faces/voices skewed, “averaging” aesthetics | Diverse, licensed refs; human review gates |
| Continuity drift | Model outputs vary across shots | Prompt/style bible; fixed seeds; reference frames |
| Legal/IP | Training data disputes, stock T&Cs | Use enterprise plans, indemnified tools; keep an audit log of prompts/inputs |
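For the "fixed seeds" guardrail, one simple approach is to derive the seed from names you already track, so nobody has to remember or copy a magic number. The sketch below is an assumption about how a team might do this, with the hypothetical helper `stable_seed`. Whether a given generative tool accepts a seed at all, and whether the same seed reproduces the same output across versions, depends on the tool.

```python
import hashlib


def stable_seed(*parts: str) -> int:
    """Derive a repeatable 32-bit seed from identifying strings.

    The same parts always give the same seed, on any machine, because the
    value comes from a SHA-256 hash rather than from Python's built-in hash().
    """
    # Join with a separator that will not appear in normal names, so that
    # ("a/b",) and ("a", "b") cannot produce the same key.
    key = "\x1f".join(parts)
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


# One seed per sequence look, shared by every shot in that sequence
# (show, sequence, and look names here are made up).
sequence_seed = stable_seed("myshow", "sq010", "look_v1")

# Bumping the look version in the style bible gives a new, equally repeatable seed.
revised_seed = stable_seed("myshow", "sq010", "look_v2")

print(sequence_seed, revised_seed)
```

Tying the seed to a named look version also documents why an output changed: a different seed means someone changed the style bible, not that the model drifted.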

7) Quick glossary & SEO keyword pack

Glossary (fast version):

Text-to-video – generate moving shots from text/image.

Neural rendering – ML that synthesizes photoreal frames guided by data (faces, motion, lighting).

Magic Mask / Roto AI – ML segmentation that selects subjects/regions frame-to-frame.

Virtual production – LED volume + real-time engines (Unreal) replacing greenscreen.

Target keywords to sprinkle (naturally):

ai in filmmaking, ai for vfx, neural rendering, de-aging ai, text-to-video, virtual production ai, resolve neural engine, after effects roto brush ai, runway gen-3, luma dream machine, ai sound design, ai script analysis

8) FAQ & “Where this is going”

Is text-to-video ready for final shots?

For stylized beats and quick cutaways, sometimes yes. For hero shots, it is usually a pre-vis and look-dev tool today, with human finishing.

Will AI replace artists?

Great artists get more leverage. The boring parts compress; taste and direction matter more.

What should shock you:

A five-person team can now prototype a studio-grade sequence in days, not months. The winners won’t be the teams with the most money; they’ll be the teams that iterate fastest without losing taste.

Sources for key claims

- ILM’s de-aging of Harrison Ford combined ML with proprietary capture and VFX craftsmanship in Dial of Destiny. (WIRED, Allure, Wikipedia)

- Respeecher’s voice synthesis supported younger Luke Skywalker/Darth Vader voices with consent workflows. (Vanity Fair, Respeecher)

- Secret Invasion used generative AI for its opening titles via Method Studios; the choice sparked industry debate. (Polygon, The Washington Post, Wikipedia)

- Runway Gen-3 Alpha and Luma Dream Machine are active options for text-to-video ideation. (Runway, Luma AI)

- Adobe Premiere’s Enhance Speech / Text-Based Editing and DaVinci Resolve’s Neural Engine features are widely used for editorial and finishing speedups. (Blackmagic Design)

- Topaz Video AI is used for upscaling/denoise in post pipelines. (Topaz Labs)

Ready-to-use daily checklist (pin this)

- Consent check for any face/voice manipulation

- Log prompts, seeds, refs into your shot ticket

- Run AI first pass, then human polish

- Compare v1/v2/v3 side-by-side; pick on taste

- Note what AI saved (time/money) right in the task—this becomes your internal ROI sheet
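If your shot tickets live in a tracker you can script, the checklist can be turned into a small gate that lists what is still missing before a shot moves on. This is a hypothetical sketch: the `ShotTicket` fields and the `checklist_gaps` helper are invented for illustration and would need to be mapped onto whatever your tracker actually stores.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ShotTicket:
    """Minimal stand-in for a shot ticket (field names are assumptions)."""
    shot_id: str
    touches_face_or_voice: bool
    consent_on_file: bool
    prompts_logged: bool       # prompts, seeds, refs recorded on the ticket
    human_polish_done: bool    # an artist finished the AI first pass
    versions_compared: int     # how many versions were reviewed side by side
    savings_note: str = ""     # time/money saved, feeds the ROI sheet


def checklist_gaps(ticket: ShotTicket) -> List[str]:
    """Return the checklist items this ticket has not satisfied yet."""
    gaps = []
    if ticket.touches_face_or_voice and not ticket.consent_on_file:
        gaps.append("consent missing for face/voice manipulation")
    if not ticket.prompts_logged:
        gaps.append("prompts, seeds, refs not logged")
    if not ticket.human_polish_done:
        gaps.append("no human polish after AI first pass")
    if ticket.versions_compared < 2:
        gaps.append("fewer than two versions compared")
    if not ticket.savings_note.strip():
        gaps.append("time/money saved not noted")
    return gaps


ticket = ShotTicket(
    shot_id="sq010_sh0040",
    touches_face_or_voice=True,
    consent_on_file=False,
    prompts_logged=True,
    human_polish_done=True,
    versions_compared=3,
    savings_note="roto: about 16 h saved vs manual estimate",
)
for gap in checklist_gaps(ticket):
    print(f"{ticket.shot_id}: {gap}")
# prints: sq010_sh0040: consent missing for face/voice manipulation
```

The gate only reports gaps; it does not decide anything. The pick between v1, v2, and v3 stays a human call on taste.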
